Large Language Models (LLMs) are revolutionizing how we interact with technology, but their impressive capabilities often mask a critical limitation: reasoning bottlenecks. While massive models can sometimes brute-force solutions, smaller, more efficient LLMs frequently struggle with complex tasks requiring multi-step inference and nuanced understanding. This constraint hinders broader adoption and limits the potential for deploying these powerful tools in resource-constrained environments.

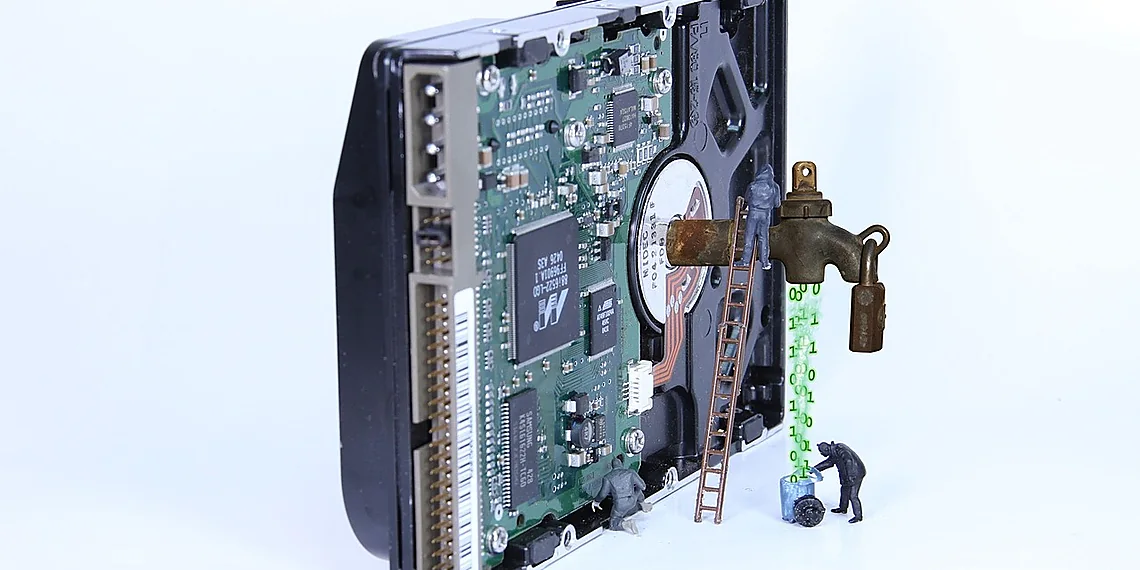

Imagine trying to solve a puzzle without being able to remember the pieces you’ve already examined – that’s essentially what happens when an LLM’s internal memory is stretched thin during intricate reasoning processes. The result? Inaccurate conclusions, sluggish response times, and a frustrating user experience. Researchers are actively seeking innovative approaches to alleviate these issues and unlock the true potential of even modestly sized language models.

A new framework called External Hippocampus offers an intriguing way forward by giving LLMs an external map to reason with. It relies on what we’re calling ‘LLM Reasoning Maps’: topological cognitive maps, built by projecting the model’s semantic space into fewer dimensions, that make it possible to see where a reasoning process is heading and to steer it at test time. Because this guidance happens outside the model’s weights, the researchers report higher accuracy, substantially faster reasoning, and more direct control over the reasoning process.

In this article, we’ll look at how the framework operates, how its maps are built, and what the researchers report on a set of 500 challenging problems. The results point toward more efficient and reliable reasoning from small LLMs.

The Cognitive Deadlock Problem

Many LLMs falter when faced with complex tasks that require multi-step reasoning, a phenomenon often referred to as the ‘cognitive deadlock problem’. While massive models can sometimes brute-force their way through these challenges, smaller LLMs, with fewer parameters and less inherent capacity, are particularly vulnerable. This isn’t simply about lacking knowledge; it’s about an inability to effectively *process* information in a sequential, reasoned manner. Imagine solving a complex puzzle where each piece depends on the previous one: if you get stuck early on, the entire process grinds to a halt.

The core of this deadlock lies in how LLMs internally represent and navigate the space of possible solutions. As the paper ‘LLMs Navigate with External Hippocampus’ explains, these models can become trapped in what the authors term ‘Cognitive Vortex’ states. Think of a ball rolling into a deep, low-entropy potential well: once inside, it struggles to escape without significant external force. Similarly, an LLM can lock onto an incorrect or unproductive line of thought, unable to backtrack or explore alternatives, which leads to inaccurate and often nonsensical outputs. These vortexes appear to be especially common in smaller models, plausibly because they have fewer ‘escape routes’ within their internal representations.

The consequences of this cognitive deadlock extend beyond simple errors; it limits the potential for LLMs to truly reason and learn from complex data. Without a mechanism to break free from these unproductive states, even well-trained models can produce inconsistent or unreliable results when faced with novel challenges. The paper’s External Hippocampus framework addresses this issue directly by providing a way to ‘intervene’ in the model’s reasoning process at test time, effectively giving it an external nudge out of those cognitive vortexes and back onto the path toward a correct solution.

In short, smaller LLMs are often hampered not by what they *know*, but by how effectively they can apply that knowledge. The ‘cognitive deadlock’ highlights this distinction and reveals a fundamental bottleneck in reasoning, one that the External Hippocampus aims to overcome by building topological cognitive maps and dynamically guiding information flow along them. The result, according to the paper, is a predictable and computationally efficient way to improve reasoning performance.

Why Reasoning Fails: Entropy & Vortexes

As described above, the ‘cognitive deadlock problem’ is not simply a matter of missing knowledge; it stems from how LLMs process information within their semantic space. Imagine a landscape dotted with potential paths: in a well-reasoned scenario, an LLM would traverse this landscape smoothly, exploring different options and converging on a solution. In practice, LLMs frequently get stuck, trapped in what the paper describes as ‘Cognitive Vortex’ states.

These vortexes arise from low-entropy potential wells within the semantic space. Think of a ball rolling into a deep depression: once there, it is difficult to escape without significant external force. These wells represent regions where the LLM’s internal representations have become overly constrained or biased, producing repetitive and unproductive reasoning loops. The model gets caught in a local minimum and cannot explore the alternative solutions that complex problem-solving needs. The effect is particularly pronounced in smaller models (7B parameters or fewer), which likely have less capacity to represent diverse possibilities and are therefore more susceptible to these traps.
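To make ‘low-entropy’ a little more concrete, the sketch below measures the Shannon entropy of a model’s next-token distribution at each step and flags a run of consecutive low-entropy steps as a possible vortex. This is an illustrative assumption, not the paper’s method: the paper defines entropy within its own semantic-space model, and the helper names, the 0.5-bit threshold, and the 3-step window are all hypothetical.

```python
import math
from typing import Optional, Sequence


def token_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy, in bits, of one next-token probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0.0)


def find_vortex(step_probs: Sequence[Sequence[float]],
                threshold: float = 0.5,
                window: int = 3) -> Optional[int]:
    """Return the step index where a run of `window` consecutive low-entropy
    steps begins, or None if no such run exists.

    `threshold` (bits) and `window` are hypothetical values for illustration.
    """
    run_start, run_len = 0, 0
    for i, probs in enumerate(step_probs):
        if token_entropy(probs) < threshold:
            if run_len == 0:
                run_start = i  # a new low-entropy run starts here
            run_len += 1
            if run_len >= window:
                return run_start
        else:
            run_len = 0  # entropy recovered, so the run is broken
    return None


peaked = [0.97, 0.01, 0.01, 0.01]             # about 0.24 bits: the model is locked in
steps = [[0.25] * 4, peaked, peaked, peaked]  # first step is uniform: 2 bits
print(find_vortex(steps))  # 1
```

The uniform first step is well above the threshold, so the flagged run starts at step 1, where the distribution collapses onto a single token for three steps in a row.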

The External Hippocampus framework introduced in the paper addresses this issue directly by building cognitive maps: topological projections of the semantic space. These maps let researchers visualize and, crucially, *intervene* in the LLM’s reasoning flow, guiding it away from vortexes and toward more productive paths. By identifying low-entropy wells and vortex states, the framework offers a new way to improve reasoning in resource-constrained language models.
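The article does not say exactly how an intervention is applied. One simple test-time nudge, assumed here purely for illustration, is to raise the sampling temperature when recent steps have stayed low-entropy: a flatter distribution gives the model more room to climb out of a well. The function names, temperatures, and threshold below are hypothetical.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax: a higher temperature flattens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy_bits(probs: Sequence[float]) -> float:
    """Shannon entropy in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0.0)


def choose_temperature(recent_entropies: Sequence[float],
                       base_temp: float = 0.7,
                       boosted_temp: float = 1.3,
                       threshold: float = 0.5) -> float:
    """Hypothetical nudge rule: if every recent step was low-entropy (a possible
    stall), sample the next step at a higher temperature; otherwise keep the base."""
    if recent_entropies and all(h < threshold for h in recent_entropies):
        return boosted_temp
    return base_temp


logits = [5.0, 1.0, 0.5, 0.0]
temp = choose_temperature([0.2, 0.1, 0.15])  # stalled, so the boosted value is used
calm = entropy_bits(softmax(logits, 0.7))
nudged = entropy_bits(softmax(logits, temp))
print(temp, nudged > calm)  # 1.3 True
```

A temperature bump is only one possible lever; it changes sampling without touching weights, which matches the test-time, no-retraining spirit of the framework.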

Introducing the External Hippocampus

The burgeoning field of Large Language Models (LLMs) has consistently pushed the boundaries of what’s possible with AI, but complex reasoning tasks often expose limitations – particularly in smaller models. A new approach called the External Hippocampus framework offers a fascinating solution to these challenges, not by retraining existing models, but by cleverly intervening *during* their reasoning process. Think of it as providing an LLM with a mental roadmap at test time, guiding its thought process towards more accurate conclusions – and crucially, doing so without requiring massive computational resources.

At its core, the External Hippocampus isn’t about changing how the model learns; it’s about helping it navigate its own internal reasoning. The framework relies on LLM Reasoning Maps, the topological cognitive maps described in the paper. These maps aren’t literal geographic representations but abstract depictions of the semantic space the LLM uses to understand and generate text. Imagine a complex maze: instead of wandering through it blindly, the model is given a simplified map of potential pathways, which lets it choose more efficient and effective routes.

The maps are created with dimensionality reduction, which compresses the LLM’s high-dimensional semantic space into a manageable, topologically organized space. This projection highlights key relationships between concepts and lets researchers observe how ‘information energy’, representing the flow of reasoning, moves within the model. With that flow visible, they can influence it strategically, guiding the model away from dead ends and toward more logical solutions. This is particularly useful for overcoming the ‘cognitive deadlock’ problem in multi-step reasoning.
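As a rough illustration of the projection step, the sketch below uses principal component analysis (PCA, computed with NumPy’s SVD) to squash a sequence of high-dimensional vectors into 2-D map coordinates. PCA is an assumed stand-in, since the article only says ‘dimensionality reduction’, and the random 768-dimensional ‘hidden states’ are synthetic placeholders rather than real model activations.

```python
import numpy as np


def project_to_map(hidden_states: np.ndarray, n_components: int = 2) -> np.ndarray:
    """Project (n_steps, hidden_dim) vectors onto their top principal components.

    PCA is an assumed stand-in; the paper's exact projection may differ.
    """
    centered = hidden_states - hidden_states.mean(axis=0)
    # Rows of vt are principal directions, ordered by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T


rng = np.random.default_rng(0)
states = rng.normal(size=(50, 768))  # 50 synthetic "reasoning steps", 768-dim each
coords = project_to_map(states)
print(coords.shape)  # (50, 2)
```

Each row of `coords` is one step’s position on the map; nonlinear methods could be swapped in where preserving local neighbourhoods matters more than global variance.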

This intervention technique is efficient: because it operates at test time and involves no retraining, its computational overhead stays low. The intervention patterns are also predictable, which should help researchers understand *why* a particular adjustment improved performance, offering insight into the inner workings of LLMs and a basis for more targeted enhancements.

Topological Maps for LLM Guidance

Imagine trying to navigate a sprawling city without a map – you’d get lost easily! The ‘External Hippocampus’ approach gives Large Language Models (LLMs) something similar: cognitive maps that help them reason more effectively. Instead of changing the model itself, this framework creates simplified representations of the information it deals with. Think of it like shrinking a complex dataset down to its most important features – highlighting key landmarks in our city analogy.

This simplification is achieved through ‘dimensionality reduction,’ a technique that squeezes high-dimensional data into lower dimensions while preserving essential relationships. The result isn’t just any reduced view, but a ‘topological cognitive map.’ This map represents how different concepts relate to each other – which ones are near, far, or connected – forming a visual guide for the LLM’s reasoning process. It’s crucial to understand this isn’t about retraining the model; it’s about providing guidance *during* its use.
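One common way to turn projected points into a map of which concepts are near, far, or connected is a k-nearest-neighbour graph. The sketch below assumes that choice (the article does not name the exact construction); `build_knn_map` and the toy coordinates are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Set, Tuple

Point = Tuple[float, float]


def build_knn_map(points: Sequence[Point], k: int = 2) -> Dict[int, List[int]]:
    """Link each projected point to its k nearest neighbours.

    Edges are added in both directions so the resulting map is undirected.
    """
    graph: Dict[int, Set[int]] = {i: set() for i in range(len(points))}
    for i, p in enumerate(points):
        # (distance, index) pairs; ties are broken by the smaller index
        ranked = sorted((math.dist(p, q), j) for j, q in enumerate(points) if j != i)
        for _, j in ranked[:k]:
            graph[i].add(j)
            graph[j].add(i)
    return {i: sorted(nbrs) for i, nbrs in graph.items()}


# Two well-separated clusters of hypothetical 2-D map coordinates
pts = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (10.0, 10.0), (11.0, 10.0)]
print(build_knn_map(pts, k=1))
# {0: [1, 2], 1: [0], 2: [0], 3: [4], 4: [3]}
```

With `k=1` the two clusters stay disconnected, which is the kind of ‘near versus far’ structure a topological map is meant to expose.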

The framework then uses these maps to direct what the researchers call ‘information energy’. In this view, reasoning is modeled as energy flowing through the semantic space, and that flow shapes the model’s decisions. By guiding the flow along specific paths on the cognitive map, researchers can help the model avoid unproductive thought loops and arrive at more accurate answers, which is particularly useful for complex, multi-step problems.
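The article leaves the mechanics of ‘guiding energy flow’ abstract. As a loose analogy only, steering along a path on the map can be pictured as a shortest-path search (Dijkstra’s algorithm here, a swapped-in technique rather than the paper’s mechanism) from the current reasoning state to a goal node, which naturally avoids a self-looping ‘vortex’ node. The node names and edge costs are invented for illustration.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Graph = Dict[str, Dict[str, float]]  # node -> {neighbour: edge cost}


def guide_path(graph: Graph, start: str, goal: str) -> Optional[List[str]]:
    """Cheapest route from the current reasoning state to a goal node (Dijkstra)."""
    frontier: List[Tuple[float, str, List[str]]] = [(0.0, start, [start])]
    best: Dict[str, float] = {start: 0.0}
    while frontier:
        cost, node, path = heapq.heappop(frontier)
        if node == goal:
            return path
        if cost > best.get(node, float("inf")):
            continue  # stale entry: a cheaper route to this node was already found
        for nbr, weight in graph.get(node, {}).items():
            new_cost = cost + weight
            if new_cost < best.get(nbr, float("inf")):
                best[nbr] = new_cost
                heapq.heappush(frontier, (new_cost, nbr, path + [nbr]))
    return None  # goal unreachable from start


# Hypothetical map: "loop" points back at itself, like a vortex the search should avoid.
map_graph: Graph = {
    "premise": {"loop": 1.0, "lemma": 2.0},
    "loop": {"loop": 0.1, "premise": 1.0},
    "lemma": {"answer": 1.0},
    "answer": {},
}
print(guide_path(map_graph, "premise", "answer"))  # ['premise', 'lemma', 'answer']
```

Even though the step into `loop` is cheaper, it never leads toward the goal, so the search routes through `lemma` instead.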

Results & Performance Gains

The External Hippocampus framework delivers notable improvements across several key metrics for LLM reasoning. In the paper’s experiments, conducted on models with up to 7 billion parameters, the map-guided method improved accuracy by 16.8% over the baseline on a set of 500 particularly challenging problems, showing that the framework can effectively guide language model reasoning. The core innovation is the construction of topological cognitive maps (LLM Reasoning Maps), which allow targeted intervention during inference.

Beyond improving accuracy, the External Hippocampus also speeds up the reasoning process itself. The authors report that reasoning time drops by a factor of at least 15 compared with the baseline. This efficiency gain matters most in resource-constrained environments or real-time applications where rapid responses are essential. Because the framework works at test time instead of modifying weights, it adds little computational overhead of its own; the speedup plausibly comes from the model escaping unproductive reasoning loops sooner.

Crucially, the approach also offers an unusual degree of controllability. By visualizing and manipulating the energy flow within the cognitive maps, researchers can observe, and predictably influence, the reasoning path the model takes. This enables targeted debugging and refinement of reasoning strategies and opens new avenues for understanding and improving LLM behavior. These predictable intervention patterns are a key difference from weight-space optimization methods such as fine-tuning.

In essence, the External Hippocampus framework offers a compelling solution to the cognitive deadlock problem often encountered in multi-step reasoning tasks, especially when dealing with smaller language models. The combination of improved accuracy, accelerated reasoning speed, and enhanced controllability positions this approach as a valuable tool for advancing LLM capabilities and unlocking their full potential for complex reasoning applications.

Accuracy Boost & Speedup

On a suite of 500 challenging problems, the External Hippocampus framework reached 81.20% accuracy, a reported 16.8% improvement over the baseline. This is particularly noteworthy because the experiments used models with at most 7 billion parameters, which suggests the framework is effective even for smaller language models that struggle with complex reasoning tasks.
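The article does not say whether the 16.8% gain is measured in percentage points or relative to the baseline score, and the two readings imply different baselines. The arithmetic below works through both; the 406-problem figure follows directly from 81.20% of 500, while the baseline values are derived here, not reported.

```latex
\begin{aligned}
\text{solved with maps} &= 0.8120 \times 500 = 406 \text{ problems} \\
\text{baseline, percentage-point reading} &= 81.20\% - 16.80\% = 64.40\% \;\Rightarrow\; 0.6440 \times 500 = 322 \text{ problems} \\
\text{baseline, relative reading} &= \frac{81.20\%}{1.168} \approx 69.52\%
\end{aligned}
```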

Beyond improving accuracy, the framework sharply reduces reasoning time: the map-guided approach cut inference time by a factor of at least 15 compared with the baseline. That makes the framework practical for real-world applications where rapid responses and efficient resource use are critical.
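For a sense of scale, a speedup of at least 15x bounds the map-guided reasoning time as follows; the 60-second baseline is a hypothetical example, not a measured value.

```latex
t_{\text{map}} \le \frac{t_{\text{base}}}{15} \approx 0.067\,t_{\text{base}},
\qquad \text{e.g. } t_{\text{base}} = 60\text{ s} \;\Rightarrow\; t_{\text{map}} \le 4\text{ s}
```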

The accuracy boost and speedup stem from the framework’s topological cognitive maps, which enable precise navigation of, and intervention in, the flow of information during reasoning. Because the maps are generated by dimensionality reduction projection, adjustments can be targeted without retraining or modifying model weights, a key advantage for both performance and computational efficiency.

The Future of Reasoning – Autonomous Growth & Implications

The External Hippocampus framework is more than a clever fix for current LLM limitations; it hints at a shift in how we might design AI systems, toward models with some capacity for autonomous growth. Imagine language models that don’t just passively learn from data but actively build and refine their understanding of a problem, much as our own brains build cognitive maps to navigate complex environments. Because the framework adjusts information flow within semantic space at test time, guided by these ‘LLM Reasoning Maps’, and requires no retraining, it suggests a path toward systems that adapt without constant retraining on massive datasets.

A particularly exciting implication is resource efficiency. The approach proves effective with models of 7B parameters or fewer, clearly outperforming the baseline in challenging multi-step reasoning scenarios. This suggests External Hippocampus could be valuable for deploying capable LLMs on devices with limited computational resources, such as edge computing applications or personalized AI assistants running directly on smartphones. If it helps smaller models close some of the reasoning gap with larger ones, it could broaden access to advanced AI capabilities.

Looking beyond the immediate benefits of addressing cognitive deadlocks, we can envision External Hippocampus enabling entirely new applications. Could these maps be used for automated knowledge discovery? Perhaps LLMs could use them to identify gaps in their understanding and proactively seek out relevant information. Furthermore, the predictable intervention patterns demonstrated by this framework open doors for explainable AI, helping us understand *how* an LLM arrived at a particular conclusion. The ability to ‘steer’ reasoning processes offers finer control and greater transparency.

Scaling External Hippocampus to larger models will present challenges, but the core principles of topological mapping and energy-flow management remain promising. Future research could explore adaptive map construction that adjusts granularity to model size and task complexity. Ultimately, this framework is a meaningful step toward AI systems that are not only more capable but also more efficient, explainable, and adaptable.

Beyond 7B: Scalability & New Applications

The External Hippocampus approach, so far demonstrated with models of up to 7 billion parameters, may also scale beyond that threshold. The current research focuses on smaller models, but the core principle of constructing and navigating semantic maps should remain applicable, although building maps for larger LLMs would likely demand more hardware resources. The framework’s strength lies not in modifying model weights directly but in guiding the reasoning process through these external topological maps. As language models keep growing, this decoupling could become increasingly valuable, offering a way to improve performance without the full cost of retraining massive weight matrices.

Beyond multi-step reasoning and ‘cognitive deadlock’, the LLM Reasoning Maps built by the External Hippocampus framework could support new capabilities. Imagine using them for complex planning tasks that require long-term memory and contextual awareness, such as robotic navigation or scientific discovery. The ability to visualize and directly intervene in the model’s ‘thought process’, represented by energy flow within the semantic map, enables debugging and targeted enhancements that are hard to achieve with standard LLM training methods. The framework could also be adapted for more nuanced knowledge editing, correcting or augmenting a model’s understanding precisely without wholesale retraining.

A key advantage of External Hippocampus is its potential resource efficiency. Map generation does require compute, but since no weights are updated it should cost far less than full fine-tuning. Reusing the same maps across multiple instances of the same model could further reduce operational costs and energy use. The approach also allows for a form of autonomous growth: as models encounter new information, the semantic map could presumably be updated incrementally instead of being rebuilt, leading to more adaptable and efficient AI systems.
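The article does not describe how maps would be updated. As one hypothetical illustration of incremental growth, the sketch below inserts a single new projected point into an existing neighbour map by linking it to its k nearest points, leaving the rest of the map untouched instead of rebuilding it. `add_to_map` and the toy data are assumptions, not the framework’s API.

```python
import math
from typing import Dict, List, Set, Tuple

Point = Tuple[float, float]


def add_to_map(points: List[Point], graph: Dict[int, Set[int]],
               new_point: Point, k: int = 2) -> int:
    """Insert one projected point into an existing undirected neighbour map.

    Mutates `points` and `graph` in place and returns the new node's id. Only
    the new node's k nearest neighbours are linked and existing edges are kept,
    so the work is dominated by distances to existing points, not a full rebuild.
    """
    new_id = len(points)
    nearest = sorted(range(new_id), key=lambda j: math.dist(new_point, points[j]))[:k]
    points.append(new_point)
    graph[new_id] = set(nearest)
    for j in nearest:
        graph[j].add(new_id)  # keep the map symmetric
    return new_id


points = [(0.0, 0.0), (1.0, 0.0), (10.0, 10.0)]
graph = {0: {1}, 1: {0}, 2: set()}
nid = add_to_map(points, graph, (0.5, 0.2))
print(nid, sorted(graph[nid]))  # 3 [0, 1]
```

The distant node 2 is untouched, which is the point of an incremental update: only the neighbourhood of the new information changes.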
